8 Load Balancing Algorithms You Should Know: Which One Fits Your System?

Any web, mobile, or distributed application that must handle thousands or even millions of requests a day needs a way to spread that traffic across multiple servers. A load balancer is one of the most important tools for doing that.

Load balancers can use different algorithms to decide which server receives each request, and each algorithm has its own strengths and weaknesses. In this article, we’ll walk you through 8 popular load balancing algorithms and explain when each one is most appropriate.

1. Round Robin

How it works:

Requests are distributed to the servers in the pool one after another; after the last server, the balancer loops back to the first.

Pros:

- Simple to implement.

- Works well when all servers are identical in capacity.

Cons:

- Doesn’t consider current server load, so it can keep sending requests to a server that is already busy.

Best for: Small systems with uniform server resources.
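
A minimal Python sketch of the rotation, assuming an in-process balancer with a fixed server list (the class and server names are hypothetical):

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation, ignoring their current load.

    Hypothetical helper for illustration, not a library API.
    """

    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool must not be empty")
        self._rotation = cycle(servers)

    def pick(self):
        # Each call returns the next server; after the last one it wraps to the first.
        return next(self._rotation)


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])  # hypothetical server names
print([lb.pick() for _ in range(5)])
# → ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

This version is not thread-safe; a balancer serving concurrent requests would need a lock or an atomic counter around the rotation.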

2. Least Connections

How it works:

Requests are sent to the server with the fewest active connections.

Pros:

- Ideal for long-lived connections.

- Helps prevent overloading busy servers.

Cons:

- Needs real-time connection tracking.

Best for: Real-time apps like chat or streaming, and APIs whose requests vary widely in duration.
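
The connection tracking this requires can be sketched as one counter per server. This is a simplified, single-threaded Python illustration with hypothetical names; it assumes the caller reports when each connection closes:

```python
class LeastConnectionsBalancer:
    """Routes each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("server pool must not be empty")
        # Live connection count per server (insertion order is preserved).
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first server with the smallest count, so ties go to pool order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection closes so the counter reflects current load.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2"])  # hypothetical server names
first = lb.acquire()   # app-1 (tie, pool order wins)
lb.acquire()           # app-2
lb.release(first)      # app-1's connection closes
print(lb.acquire())    # → app-1 (0 active vs. 1 on app-2)
```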

3. Weighted Round Robin

How it works:

Similar to Round Robin, but each server is assigned a weight. Servers with higher capacity handle more requests.

Pros:

- Better for environments where server resources differ.

Cons:

- Requires manual tuning of weights.

- Doesn’t adapt to real-time server performance.
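
One common way to implement the weights is smooth weighted round robin, which interleaves picks instead of sending a burst of consecutive requests to the heaviest server. The sketch below illustrates that technique under assumed integer weights; it is not any particular product's code, and the names are hypothetical:

```python
class SmoothWeightedRoundRobin:
    """Weighted round robin that spreads a heavy server's turns across the cycle."""

    def __init__(self, weights):
        # weights: server -> positive integer weight (hand-tuned, per the cons above)
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("need at least one server and all weights positive")
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        self.current = {server: 0 for server in self.weights}

    def pick(self):
        # 1) Every server earns credit equal to its weight.
        for server, weight in self.weights.items():
            self.current[server] += weight
        # 2) The server with the most credit wins (ties go to pool order).
        best = max(self.current, key=self.current.get)
        # 3) The winner pays back the total weight, so over time each server's
        #    share of picks matches its share of the total weight.
        self.current[best] -= self.total
        return best


lb = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
print([lb.pick() for _ in range(7)])
# → ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']
```

Over those 7 picks, "big" is chosen 5 times and each small server once, matching the 5:1:1 weights. Because the weights are fixed, the rotation still won’t react if "big" slows down.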

4. Weighted Least Connections

How it works:

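Combines Least Connections with weights: the balancer compares each server’s active connections divided by its weight and picks the lowest, so more powerful servers handle proportionally more connections.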

Pros:

- Balances actual usage with server capability.

Cons:

- More complex setup.

Best for: Heterogeneous environments with varying server strengths.
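
A minimal sketch, assuming the common approach of comparing each server’s active connections divided by its weight, integer weights, and a caller that reports closed connections (names are hypothetical; real balancers differ in details such as tie-breaking):

```python
class WeightedLeastConnectionsBalancer:
    """Picks the server with the lowest active-connections-to-weight ratio."""

    def __init__(self, weights):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("need at least one server and all weights positive")
        self.weights = dict(weights)
        self.active = {server: 0 for server in self.weights}

    def acquire(self):
        # A weight-3 server with 3 connections counts as busy as a weight-1 server with 1.
        # Ties go to pool order because min() keeps the first minimum it sees.
        server = min(self.active, key=lambda s: self.active[s] / self.weights[s])
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1


lb = WeightedLeastConnectionsBalancer({"big": 3, "small": 1})  # hypothetical pool
print([lb.acquire() for _ in range(8)])
# → ['big', 'small', 'big', 'big', 'big', 'small', 'big', 'big']
```

After 8 long-lived connections, "big" holds 6 and "small" holds 2, the 3:1 ratio the weights ask for.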

5. IP Hash

How it works:

Uses the client’s IP address to generate a hash, which maps to a specific server.

Pros:

- Maintains session persistence: requests from the same IP go to the same server, as long as the server pool doesn’t change.

Cons:

- Can cause unbalanced load if client IPs are unevenly distributed, for example when many users share one IP behind a corporate NAT or proxy.

Best for: Systems requiring sticky sessions (e.g., shopping carts, user logins).
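
A minimal sketch of hash-based mapping, assuming a plain "hash modulo pool size" scheme (the function name is hypothetical). It uses `hashlib` rather than Python’s built-in `hash()`, because string hashing in Python is randomized per process and would break stickiness across restarts:

```python
import hashlib


def pick_by_ip(client_ip, servers):
    """Deterministically map a client IP to one server (hash modulo pool size)."""
    # A stable digest gives the same result in every process,
    # unlike the built-in hash(), which is salted per interpreter run for str.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]  # hypothetical pool
first = pick_by_ip("203.0.113.7", servers)
# The same client IP keeps landing on the same server while the pool is unchanged.
assert all(pick_by_ip("203.0.113.7", servers) == first for _ in range(100))
print(first)
```

Because the index is taken modulo the pool size, adding or removing a server remaps most clients, which is why stickiness only holds while the pool is stable. Production balancers also differ in what they hash; NGINX’s `ip_hash`, for instance, uses the first three octets of an IPv4 address.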

6. Random

How it works:

Requests are sent to randomly selected servers.

Pros:

- Very easy to implement.

Cons:

- Risk of uneven distribution and potential overload.

Best for: Lightweight systems, testing environments.
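
A small Python simulation shows the trade-off: a uniform random pick needs no state, but short runs can be lopsided even though long runs even out. The pool names are hypothetical, and the seed is fixed only to make the demo repeatable:

```python
import random
from collections import Counter


def pick_random(servers, rng=random):
    # Uniform choice: no per-server state and no coordination between balancers.
    return rng.choice(servers)


servers = ["app-1", "app-2", "app-3"]  # hypothetical pool
rng = random.Random(42)  # seeded only so the demo is repeatable

short_run = Counter(pick_random(servers, rng) for _ in range(12))
long_run = Counter(pick_random(servers, rng) for _ in range(30_000))
print(short_run)  # over 12 requests the split can be noticeably uneven
print(long_run)   # over 30,000 requests each count lands close to 10,000
```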

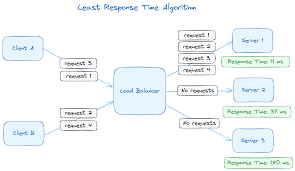

7. Least Response Time

How it works:

Routes traffic to the server with the shortest response time, measured continuously in real time.

Pros:

- Provides faster response to users.

Cons:

- Requires continuous performance monitoring.

Best for: Performance-critical systems like games or financial platforms.
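
Individual response times are noisy, so a common approach is to smooth them. The sketch below assumes an exponentially weighted moving average (EWMA) with a hypothetical smoothing factor `alpha = 0.3`; the class name and the way latencies are reported are also assumptions:

```python
class LeastResponseTimeBalancer:
    """Routes to the server with the lowest smoothed response time (hypothetical helper)."""

    def __init__(self, servers, alpha=0.3):
        # alpha: weight given to the newest sample (assumed value; tune per workload).
        self.alpha = alpha
        self.ewma_ms = {server: None for server in servers}  # None = not measured yet

    def pick(self):
        # Send traffic to unmeasured servers first so every server gets a measurement.
        for server, value in self.ewma_ms.items():
            if value is None:
                return server
        return min(self.ewma_ms, key=self.ewma_ms.get)

    def record(self, server, latency_ms):
        # EWMA update: recent samples count more than old ones.
        previous = self.ewma_ms[server]
        if previous is None:
            self.ewma_ms[server] = latency_ms
        else:
            self.ewma_ms[server] = self.alpha * latency_ms + (1 - self.alpha) * previous


lb = LeastResponseTimeBalancer(["app-1", "app-2"])  # hypothetical server names
lb.record("app-1", 120.0)
lb.record("app-2", 40.0)
print(lb.pick())  # → app-2
lb.record("app-2", 400.0)  # app-2 slows down: 0.3 * 400 + 0.7 * 40 ≈ 148 ms
print(lb.pick())  # → app-1
```

The continuous monitoring listed under Cons corresponds to calling `record()` after every response; a real deployment would also need a way to handle servers that stop responding entirely.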

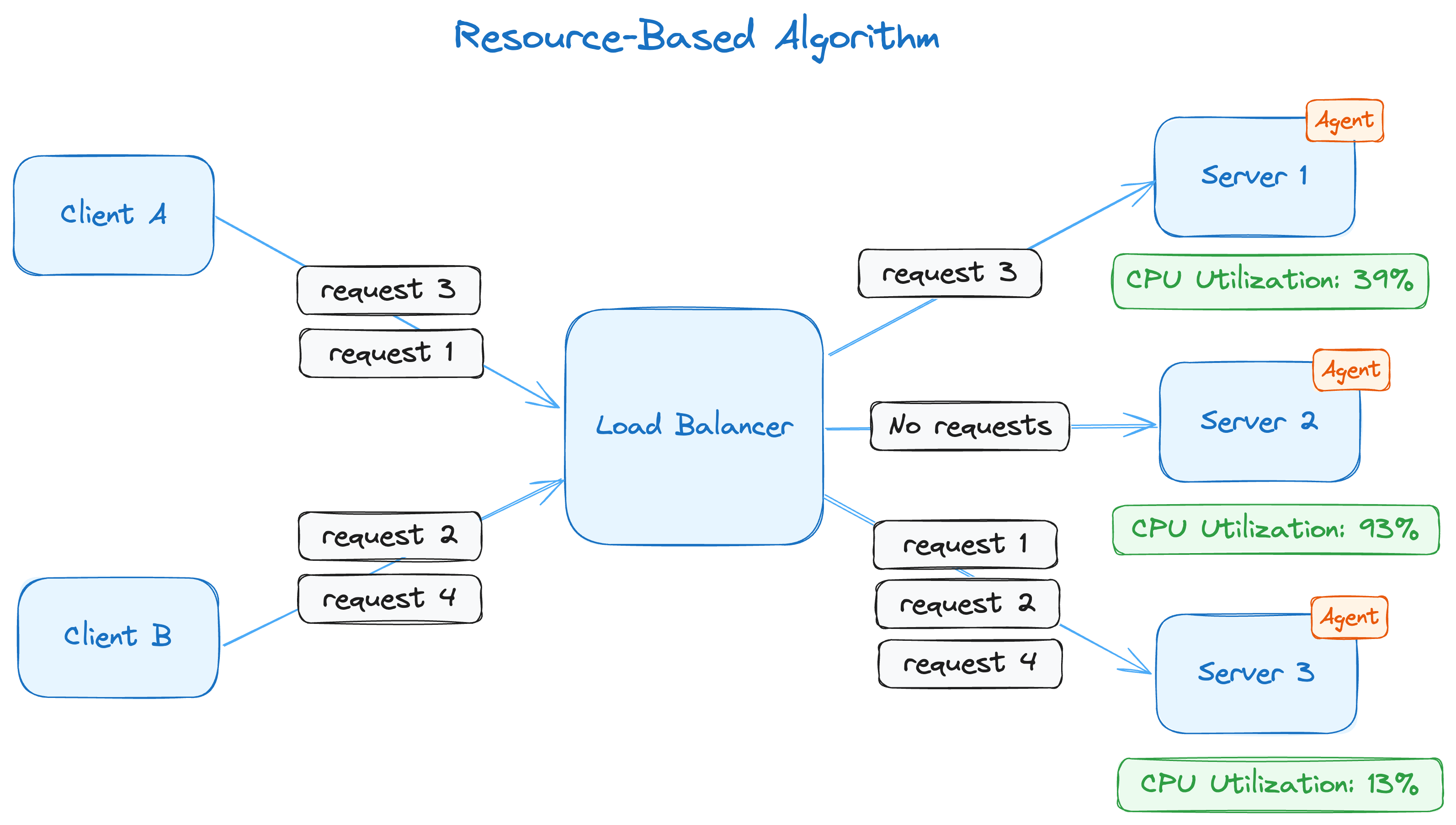

8. Resource-Based (Dynamic Load Balancing)

How it works:

Distributes traffic based on actual server resource usage (CPU, memory, bandwidth…).

Pros:

- Maximizes efficiency across the system.

- Adapts dynamically.

Cons:

- Complex to implement.

- Requires monitoring integration (e.g., Prometheus, Zabbix).

Best for: Cloud-native, containerized systems (Kubernetes, microservices).
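
A sketch of the scoring step, assuming resource usage has already been collected (for example from a monitoring system) as fractions between 0 and 1. The per-resource weights, the 0.9 saturation threshold, and all names are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    # Hypothetical usage snapshot; each value is a fraction from 0.0 to 1.0.
    cpu: float
    memory: float
    bandwidth: float


# Assumed importance of each resource; tune to what actually limits your service.
WEIGHTS = {"cpu": 0.5, "memory": 0.3, "bandwidth": 0.2}


def load_score(stats):
    # Weighted sum of resource usage: lower means more spare capacity.
    return (WEIGHTS["cpu"] * stats.cpu
            + WEIGHTS["memory"] * stats.memory
            + WEIGHTS["bandwidth"] * stats.bandwidth)


def pick_least_loaded(snapshot, max_score=0.9):
    # Skip servers at or above the threshold; if all are saturated, use the whole pool.
    healthy = {name: s for name, s in snapshot.items() if load_score(s) < max_score}
    candidates = healthy or snapshot
    return min(candidates, key=lambda name: load_score(candidates[name]))


snapshot = {
    "app-1": ServerStats(cpu=0.85, memory=0.60, bandwidth=0.40),  # score ≈ 0.685
    "app-2": ServerStats(cpu=0.30, memory=0.70, bandwidth=0.20),  # score ≈ 0.40
    "app-3": ServerStats(cpu=0.50, memory=0.40, bandwidth=0.90),  # score ≈ 0.55
}
print(pick_least_loaded(snapshot))  # → app-2
```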

Summary Comparison Table

| Algorithm | Simple | Sticky Sessions | High Performance | Handles Mixed Resources |
|---|---|---|---|---|
| Round Robin | ✅ | ❌ | ❌ | ❌ |
| Least Connections | ❌ | ❌ | ✅ | ✅ |
| Weighted Round Robin | ✅ | ❌ | ❌ | ✅ |
| Weighted Least Connections | ❌ | ❌ | ✅ | ✅ |
| IP Hash | ✅ | ✅ | ❌ | ❌ |
| Random | ✅ | ❌ | ❌ | ❌ |
| Least Response Time | ❌ | ❌ | ✅ | ✅ |
| Resource-Based | ❌ | ❌ | ✅ | ✅ |

Final Thoughts

There’s no one-size-fits-all algorithm. Choosing the right load balancing strategy depends on your application architecture, system size, and performance goals.

Most tools, such as Nginx, HAProxy, and Kubernetes Ingress controllers, support multiple load balancing algorithms. You can also use monitoring tools like Prometheus and Grafana to track server load in real time and feed that data back into how traffic is distributed.